The 10 commandments to effectively test the limits of your web platform

1. Goals

Load testing is often seen as a way to determine whether a platform can hold 500, 1,000 or 10,000 simultaneous users. It is not. To prove this kind of claim, the scenarios used during load tests would need to match the exact behavior of real users flowing into the platform and using the service, which is realistically impossible to achieve.

Load testing should rather be considered a tool that highlights the SPOFs (Single Points of Failure), weaknesses, bottlenecks and breakpoints of the platform, so that we know where to focus our efforts to improve the efficiency and resiliency of the infrastructure or the application code.

These tests are also useful to measure the evolution of the platform’s performance as the infrastructure or application is updated.

Finally, combined with a metrology system, these tests can be used to effectively define the alert thresholds to be set up to anticipate performance degradations, or to find the indicators and thresholds to be monitored when setting up dynamic infrastructures with mechanisms such as autoscaling.
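As a rough illustration of how load test results can feed an alert threshold, the sketch below takes pairs of CPU usage and 95th percentile response time recorded during a test, finds the lowest CPU level at which latency went over a target, and suggests alerting somewhat below that level. The function name, the sample values, the latency target and the 0.8 safety margin are all assumptions made for this example, not values we prescribe.

```python
from typing import Iterable, Optional, Tuple


def suggest_cpu_alert_threshold(
    samples: Iterable[Tuple[float, float]],
    latency_target_ms: float,
    safety_margin: float = 0.8,
) -> Optional[float]:
    """Suggest a CPU alert threshold (in percent) from load test samples.

    Each sample is a (cpu_percent, p95_ms) pair measured at one load level.
    Returns None when latency never exceeded the target during the test.
    """
    # CPU levels at which the platform was already degraded.
    degraded = [cpu for cpu, p95_ms in samples if p95_ms > latency_target_ms]
    if not degraded:
        return None
    # Alert before reaching the lowest CPU level where degradation was seen.
    return min(degraded) * safety_margin


# Hypothetical measurements from a stepped load test: (cpu_percent, p95_ms).
measurements = [(30, 200), (50, 250), (70, 400), (85, 1200), (95, 3000)]
print(suggest_cpu_alert_threshold(measurements, latency_target_ms=1000))
```

The same idea could be applied to any indicator collected during the test (memory, connection count, queue length), and the result is only a starting point to refine against production data.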

2. Tools

There are many tools available to perform stress tests and load tests.

At Iguana Solutions, we have strong experience with Gatling and OctoPerf (JMeter). No tool is better than the others: they all have their pros and cons.

We prefer tools with features adapted to our needs (easy creation of scenarios via the use of proxies, creation of standardized and easily interpretable reports, advanced customization and configuration, easy integration into the application), which allow us to implement a test plan defined beforehand.

A rigorous methodology also allows us to reuse the whole setup, so that results can be read, compared and understood, and then summarized in a way that everyone can understand.

3. Scenario (Go for pragmatism over perfection)

As mentioned in the goals section, it is almost impossible to develop the perfect scenario that is going to predict and replicate the real behavior of your customers and/or users.

This is why we recommend developing multiple scenarios (between 3 and 5 depending on the complexity and depth of your service or application) that describe, as well as you can, the expected behavior of your users. Those scenarios can then be tested more or less heavily depending on their probability.

At Iguana Solutions, we work with a lot of online merchants. We usually advise them to create at least 3 basic scenarios:

- The first one simulating an unauthenticated user browsing the website, so we can test the different layers of cache;

- Another scenario where the user logs in, browses the articles, adds some products to his cart and finalizes the order;

- And finally a scenario where a user simply creates an account.
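To make "tested more or less heavily depending on their probability" concrete, here is a minimal sketch that splits a number of virtual users across the three scenarios above in proportion to a weight. The scenario names and the 70/25/5 split are assumptions chosen for illustration; your own analytics should drive the real values.

```python
from typing import Dict

# Hypothetical scenario mix (weights are relative, here out of 100).
SCENARIO_WEIGHTS = {
    "anonymous_browsing": 70,
    "login_and_checkout": 25,
    "account_creation": 5,
}


def allocate_users(total_users: int, weights: Dict[str, int]) -> Dict[str, int]:
    """Split total_users across scenarios proportionally to their weights."""
    total_weight = sum(weights.values())
    exact = {name: total_users * w / total_weight for name, w in weights.items()}
    # Start with the whole part of each share.
    allocation = {name: int(share) for name, share in exact.items()}
    # Hand the remaining users to the scenarios with the largest fractional part.
    leftover = total_users - sum(allocation.values())
    by_remainder = sorted(exact, key=lambda n: exact[n] - allocation[n], reverse=True)
    for name in by_remainder[:leftover]:
        allocation[name] += 1
    return allocation


print(allocate_users(200, SCENARIO_WEIGHTS))
```

Most load testing tools let you express such a split directly in the test plan; the point of the sketch is only to show that each scenario receives a share of the load that reflects how likely it is.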

For all other industries or use-cases, and as a general note, we use our experience to define with our customers a list of pages, functions and transactions that consume a lot of the platform’s resources (compute, memory, storage) in order to integrate them in the scenarios.

4. Use dynamic data (Think time and data)

Think time is the time a user spends reading a web page and thinking before performing the next action. On the platform side, it shows up as a pause of variable duration between incoming requests. It is essential to implement this parameter in your tests in order to make your scenario realistic and not overly aggressive towards your platform. Of course, we recommend randomizing the think time within a reasonable time bracket.
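A randomized think time can be sketched as follows. This is a minimal illustration, not the way any particular tool implements it: the function names, the 2 to 8 second bracket and the idea of passing the steps as plain callables are assumptions made for the example.

```python
import random
import time
from typing import Callable, Iterable, Optional


def random_think_time(min_seconds: float, max_seconds: float, rng: random.Random) -> float:
    """Draw a pause duration uniformly from the [min_seconds, max_seconds] bracket."""
    if min_seconds < 0 or max_seconds < min_seconds:
        raise ValueError("expected 0 <= min_seconds <= max_seconds")
    return rng.uniform(min_seconds, max_seconds)


def run_scenario(
    steps: Iterable[Callable[[], None]],
    min_think: float = 2.0,
    max_think: float = 8.0,
    rng: Optional[random.Random] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Run each step of a scenario, pausing for a random think time after it."""
    rng = rng or random.Random()
    for step in steps:
        step()  # e.g. request a page or submit a form
        sleep(random_think_time(min_think, max_think, rng))
```

The `sleep` parameter is injectable so the pauses can be recorded or skipped when trying the scenario out, instead of actually waiting.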

Most tools offer this feature by default, but the challenge is to find a realistic value for the think time according to your specific audience. To achieve this, you can rely on your regular web analytics tools, such as Google Analytics, Fathom Analytics or Matomo, to check how long your real users stay on a page of your site before acting on it.

Finally, it can be useful to introduce in your tests some dynamic data (sending a form, a file, a data set) to anticipate these behaviors which often take up a lot of resources on the platform.

5. Reusability (Test Often, CI/CD)

One of the main goals of load testing is to know whether a commit to your code or infrastructure leads to any performance loss. This is why it’s essential to include load testing in your agile development process.

You can, for instance, trigger a load test after each push to the staging environment and compare the result with the previous push in order to raise a warning if performance isn’t as good as before. This whole chain of events can be fully automated in your CI/CD pipeline.

It is essential to test often, which is also why we recommend that you start developing your scenarios early in the lifecycle of your application.
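The comparison step could look like the sketch below, which checks the 95th percentile response time of the current run against the previous one. The choice of the 95th percentile, the nearest-rank method and the 10% tolerance are assumptions for this example; any metric exported by your load testing tool can be compared in the same way.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: int) -> float:
    """Return the pct-th percentile of samples using the nearest-rank method."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def has_regressed(
    previous_ms: Sequence[float],
    current_ms: Sequence[float],
    pct: int = 95,
    tolerance: float = 0.10,
) -> bool:
    """Tell whether the current run is slower than the previous one beyond the tolerance."""
    baseline = percentile(previous_ms, pct)
    candidate = percentile(current_ms, pct)
    return candidate > baseline * (1 + tolerance)
```

In a pipeline, a job could call `has_regressed` with the response times of the last two runs and print a warning or fail the stage when it returns `True`.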

6. Metrology

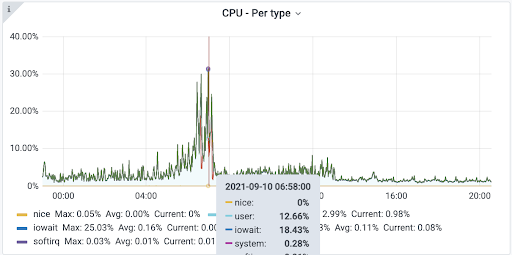

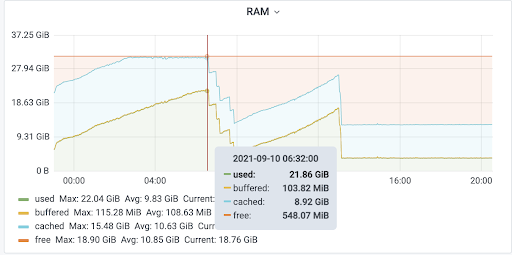

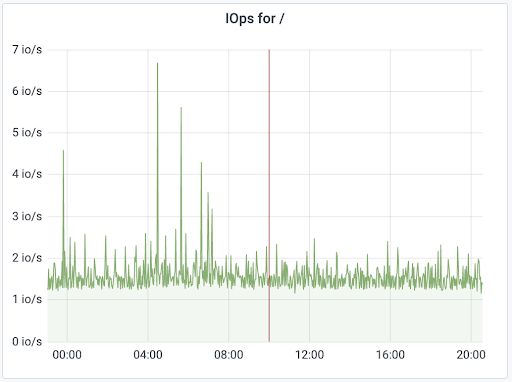

Metrology is essential when it comes to analysing the results of your tests. It allows you to quickly determine where the bottlenecks of your platform are by giving you detailed metrics on every service and part of your infrastructure.

Here at Iguana Solutions, when performing load tests, we make sure to measure hardware metrics such as the CPU, RAM, disk and network usage of every component of the infrastructure.

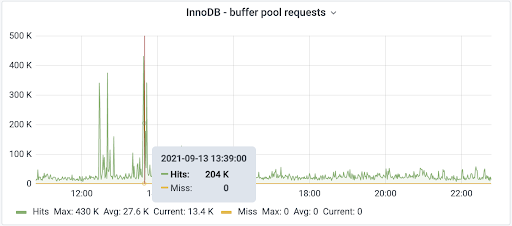

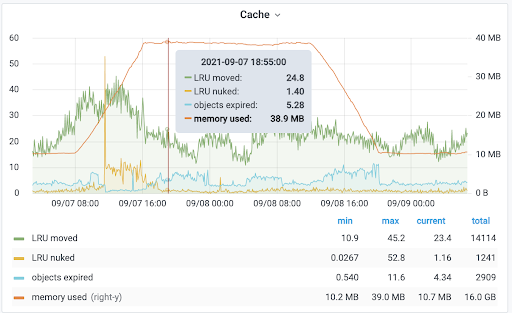

We also collect many middleware metrics for services such as Nginx, Apache, Varnish, HAProxy, Redis, MySQL…

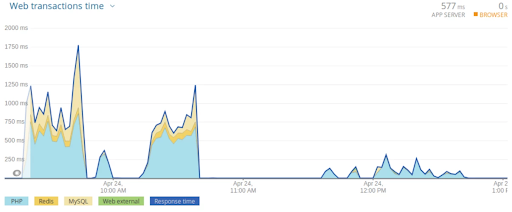

In addition to those metrics, we strongly recommend using RUM (Real User Monitoring) and application profiling tools such as New Relic, AppDynamics, Datadog…

The goal, obviously, is to have a full picture of what is happening on the hardware, middleware and software to understand where you need to focus your efforts in order to be able to scale your platform more efficiently.

Tools such as New Relic are very good at determining where the application spends most of its time. In our experience, looking at the time spent in database requests is always a good starting point for investigating and improving performance.

7. Staging/Production

At Iguana Solutions we always recommend and set up similar infrastructures for production and staging, usually with smaller compute capacities and somewhat reduced redundancy for the latter environment.

The main objective here is to guarantee that the application behaves in the same way in staging as it would in production, by using the same load balancing mechanisms, caching mechanisms etc.

However, even though we strongly recommend testing every deployment on the staging environment, we also advise scheduling at least one weekly load test on your production platform, for example at night or during a quiet period.

8. Stress Testing vs Load Testing

It is worth mentioning that there are actually two kinds of tests that you can perform: stress tests and load tests.

Both have different goals and consequences:

- Stress Test

The goal of a stress test is usually to take the platform to its absolute limits in order to detect clear breakpoints. As the goal of a stress test is to completely overwhelm the platform, we don’t recommend performing one in a production environment. Instead, if you would like a clear idea of the limits of your production environment, we advise temporarily scaling your staging environment to match your production capacity.

- Load Test

Load tests are usually less tough on the platform. The goal here is to inject a reasonable number of scenarios in order to observe how the platform and application react, and to ensure that the last code commit or infrastructure change didn’t impact performance or scalability in a negative way.
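For the stress test case, one way to read a breakpoint from the results is to increase the load in steps and look for the first step where the platform stops behaving acceptably. The sketch below assumes each step reports a number of virtual users, an error rate and a 95th percentile response time; the field names and the limits (1% errors, 2,000 ms) are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class StepResult:
    virtual_users: int
    error_rate: float  # fraction of failed requests, between 0.0 and 1.0
    p95_ms: float      # 95th percentile response time in milliseconds


def find_breakpoint(
    steps: Iterable[StepResult],
    max_error_rate: float = 0.01,
    max_p95_ms: float = 2000.0,
) -> Optional[StepResult]:
    """Return the first load step where errors or latency exceed the limits."""
    for step in sorted(steps, key=lambda s: s.virtual_users):
        if step.error_rate > max_error_rate or step.p95_ms > max_p95_ms:
            return step
    return None  # the platform held up at every tested load level


# Hypothetical results from a stepped ramp-up.
ramp = [
    StepResult(500, 0.000, 320.0),
    StepResult(1000, 0.002, 450.0),
    StepResult(2000, 0.015, 900.0),
    StepResult(4000, 0.200, 5200.0),
]
print(find_breakpoint(ramp))
```

Cross-checking the step returned here with the infrastructure metrics collected at the same moment is what points to the component that gave way first.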

9. Think outside the box

It is usually recommended to perform load or stress tests from an outside infrastructure. For instance, at Iguana Solutions we always launch our tests from an isolated AWS or GCP environment, no matter where the platform we want to test is hosted.

This ensures that you test the capacity of your whole stack (including your capacity to handle incoming traffic coming from another network) instead of working from within your local network.

10. Going further

Through the use of load testing, we saw how to identify the SPOFs (Single Points of Failure), weaknesses, bottlenecks and breakpoints of a platform. But when you start the process of continuously improving your platform, it is also important to think about web performance on the frontend side.

Indeed, search engines such as Google, Yahoo and Bing naturally promote websites that follow certain performance best practices. This is part of what we call SEO (Search Engine Optimization), and some of the quick wins are to optimize the size of your static assets, compress them using Gzip or Brotli when possible, use HTTP/2, execute non-essential JavaScript at the end of page loading, use the latest SSL/TLS ciphers, etc.
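To get a feel for what compression can bring, the sketch below measures how much smaller a text asset becomes once gzip-compressed, using Python's standard `gzip` module. The stylesheet content is made up for the example and is deliberately repetitive, so real assets will show different savings. In practice, compression is usually enabled in the web server or CDN rather than in application code.

```python
import gzip


def gzip_savings(payload: bytes, level: int = 6) -> float:
    """Return the fraction of bytes saved by gzip-compressing payload."""
    if not payload:
        return 0.0
    compressed = gzip.compress(payload, compresslevel=level)
    return 1 - len(compressed) / len(payload)


# Hypothetical static asset: a small, repetitive stylesheet.
css = (".product-card { margin: 0; padding: 8px; color: #333; }\n" * 200).encode("utf-8")
print(f"gzip would save about {gzip_savings(css):.0%} of this stylesheet")
```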

We have at our disposal several tools that allow us to analyze, monitor and take action accordingly. For instance, at Iguana Solutions we use GTmetrix or WebPageTest.

These tools give us a complete assessment of the web performance of a page and indicate the points to improve, such as the cache retention rate, the size of the images or the order in which some JS scripts should be loaded.

Contact Iguana Solutions to find out more and tell us about your needs!