Dual AI Hardware Solutions: Powering Production and Fueling Innovation

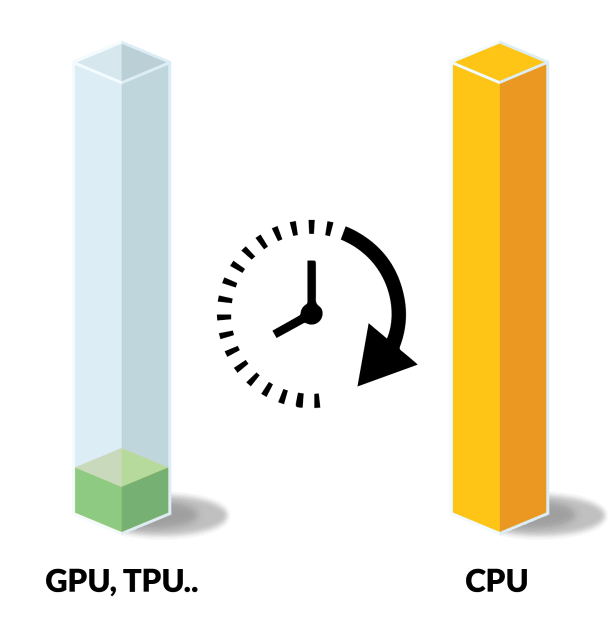

We offer two specialized General AI hardware infrastructures for distinct needs: a Production-Ready solution and an R&D-Focused option. The Production-Ready infrastructure is engineered for maximum reliability and compliance, making it ideal for critical environments where uptime is crucial, such as healthcare and finance. It is robust but comes at a higher cost. The R&D-Focused infrastructure is more budget-friendly and is designed for experimental and development work where full production compliance isn’t required. It suits organizations that want to innovate and test AI models economically, with the option to move to the Production-Ready infrastructure when needed.

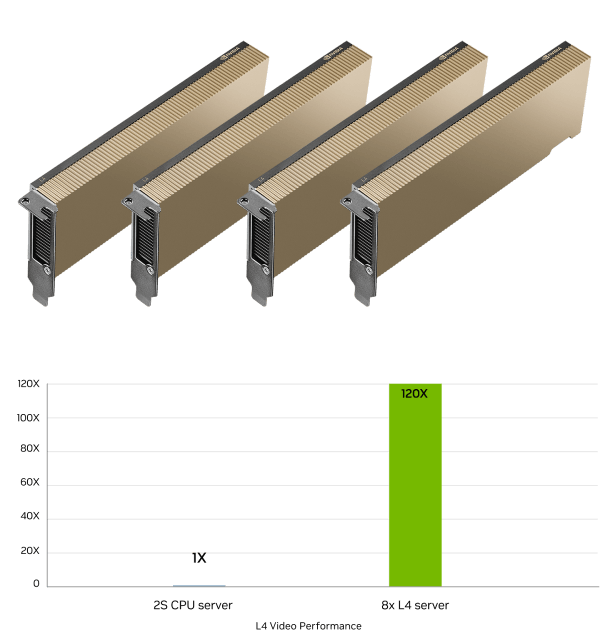

Powering Production

Designed for high-stakes environments, this infrastructure uses robust, high-performance configurations to ensure continuous, reliable operation. It minimizes downtime risk in critical sectors such as healthcare and finance, though at a higher cost.

Fueling Innovation

This cost-effective R&D infrastructure supports innovation without the expense of full production compliance. It’s ideal for developing and testing AI models in pre-production phases. It offers flexibility but is not suited to all production requirements.